
Adaptive figure-ground classification

INVESTIGATORS

Dr. Yisong Chen, Dr. Antoni Chan, et al.

KEYWORDS:

Image segmentation, Foreground extraction, Mean-shift, Classifier fusion, KL-divergence, Mahalanobis distance.

BRIEF DESCRIPTION

We aim to build a foreground extraction framework whose results are close to human perception. The underlying idea of our method stems from analyzing the human visual mechanism at work in the Ishihara color test. Figure (a) shows an example color test plate.

Figure (a): example Ishihara color test plate. Figure (b): the same plate converted to grayscale.

Several interesting observations can be drawn from a careful investigation of how a human recognizes the numeral in this mosaic.

Observation 1: First of all, the resolution of the plate image is not crucial to this test. Although finer pixel primitives give higher image quality, a coarser granularity, like the small disks in the figure, does not prevent a person with normal color vision from tracing the contours of the foreground regions. Therefore, we adopt super-pixels as our primitive.
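As a rough illustration of working with coarse patches instead of pixels, the sketch below splits an image into square tiles and describes each tile by its mean color and centroid. The tiling is a hypothetical stand-in for a real super-pixel segmentation (for example one produced by mean-shift); the function name `grid_superpixels` and the `block` parameter are illustrative assumptions, not part of the released package.

```python
import numpy as np

def grid_superpixels(image, block):
    """Split an H x W x 3 image into block x block tiles and summarize each tile.

    Hypothetical stand-in for a real super-pixel segmentation: every tile plays
    the role of one "disk" and is described by its mean color and its centroid.
    Returns an (N, 5) array of [r, g, b, row, col] features, tiles in row-major order.
    """
    h, w, _ = image.shape
    feats = []
    for r0 in range(0, h, block):
        for c0 in range(0, w, block):
            tile = image[r0:r0 + block, c0:c0 + block]
            mean_color = tile.reshape(-1, 3).mean(axis=0)
            # Centroid in pixel coordinates; tiles on the right/bottom edge may be smaller.
            row = r0 + (tile.shape[0] - 1) / 2.0
            col = c0 + (tile.shape[1] - 1) / 2.0
            feats.append([*mean_color, row, col])
    return np.array(feats)
```

Real super-pixels follow image content rather than a fixed grid, but the resulting per-patch table of color plus position has the same form.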

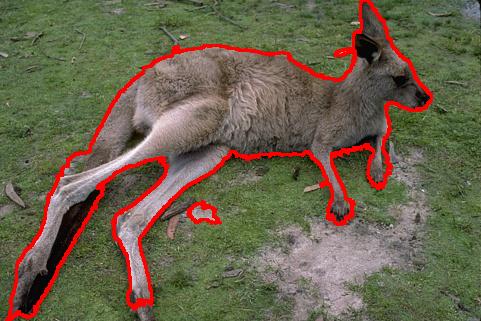

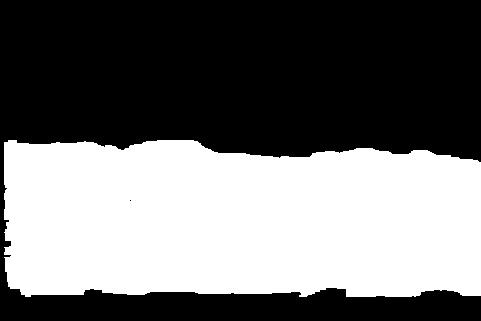

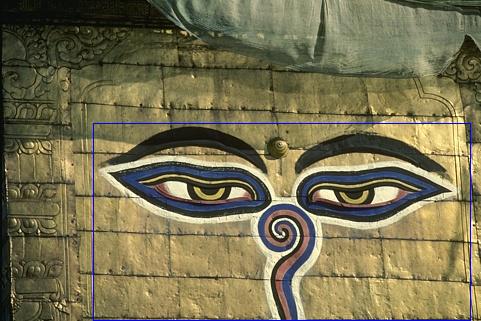

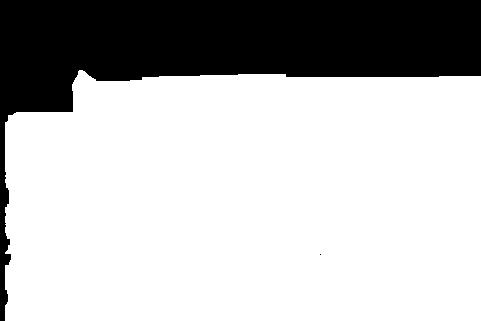

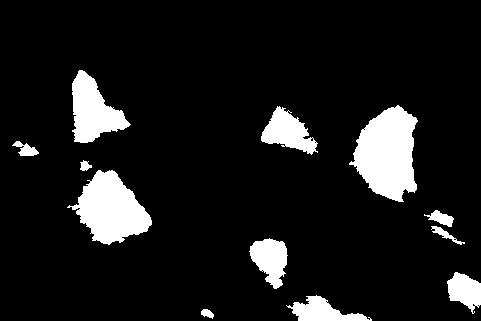

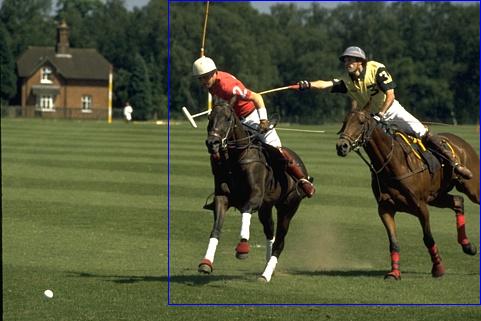

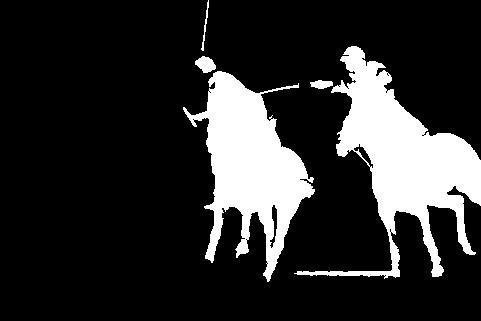

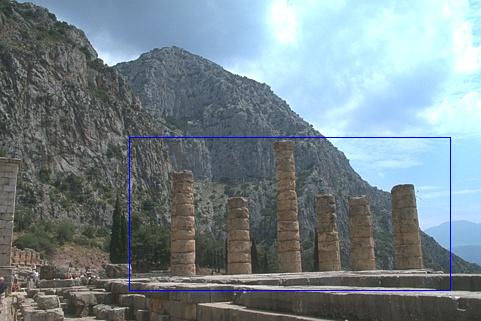

Starting from these observations, we formulate foreground extraction as solving a foreground-background puzzle (f-g puzzle). Some result images follow. In our TIP paper, the work is extended to an enhanced figure-ground classification method with background prior propagation (EFG-BPP).

Observation 2: Another very important observation is that, although the foreground (background) patches appear random in color and size, as a whole all the foreground patches are visually well separated from all the background patches. Moreover, the patches along the boundary of the plate fully characterize the color and spatial distribution of the global background. We believe this is the most important visual cue that enables viewers to extract the foreground numeral from the background.
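One way to turn this observation into a computation, sketched here under our own assumptions, is to fit a single Gaussian to the features of the boundary patches and score every patch by its Mahalanobis distance to that background model. The single-Gaussian model, the `eps` regularizer, and the function name `background_scores` are hypothetical; this is not a description of the exact model used in the f-g puzzle or EFG-BPP.

```python
import numpy as np

def background_scores(features, is_border, eps=1e-6):
    """Score patches by Mahalanobis distance to a border-derived background model.

    features  : (N, D) array of per-patch features (e.g. mean color).
    is_border : (N,) boolean array marking patches on the image boundary.
    Returns an (N,) array; larger values mean "less like the background".
    """
    bg = features[is_border]
    mean = bg.mean(axis=0)
    # Regularize the covariance so it stays invertible with few border samples.
    cov = np.cov(bg, rowvar=False) + eps * np.eye(features.shape[1])
    inv_cov = np.linalg.inv(cov)
    diff = features - mean
    # Row-wise quadratic form diff_i^T * inv_cov * diff_i, then square root.
    return np.sqrt(np.einsum("ij,jk,ik->i", diff, inv_cov, diff))
```

Patches with high scores are foreground candidates; a threshold, or a second classifier, would still be needed to make the final decision.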

Observation 3: A less obvious but easily verified fact is that the seams between adjacent disks are of little help in this test, whether they separate foreground disks from background disks or lie within the same region. Stated another way, if we convert the plate to a grayscale image as in figure (b), one fails to identify the foreground numeral, even though all the seams remain unchanged. This observation leads to the conclusion that, in general, color and spatial cues carry enough information to solve the puzzle. An edge map may help by providing additional information, but satisfactory results can often be obtained from color and spatial cues alone.
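The grayscale argument can be checked numerically. The sketch below applies the ITU-R BT.601 luma weights, a common RGB-to-gray conversion, to two hypothetical disk colors that we chose to have almost the same luma: they collapse to nearly the same gray level even though they are far apart in RGB space.

```python
def luma(rgb):
    """ITU-R BT.601 luma, the weighting used by many RGB-to-grayscale conversions."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b

def rgb_distance(a, b):
    """Euclidean distance between two RGB triples."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

# Hypothetical plate colors: a reddish "figure" disk and a greenish "ground" disk.
figure_disk = (200, 100, 100)
ground_disk = (100, 151, 100)

print(round(luma(figure_disk), 1), round(luma(ground_disk), 1))  # both 129.9
print(round(rgb_distance(figure_disk, ground_disk), 1))          # 112.3
```

After conversion the two disks are practically indistinguishable, while their color difference remains large; this is why the color cue, not the seams, carries the figure.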

Observation 4: Although most people read the numeral “74” from the color test, some others may read “21”. This suggests that more than one reasonable solution exists. This is also supported by the well-known fact that different viewers may give different segmentations of the same image. Therefore, the popular energy-minimization schemes that search for only one global optimum might not be suitable in practice. A more plausible strategy is to allow multiple solutions corresponding to different understandings of the scene.
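A minimal, hypothetical way to keep several candidate readings instead of committing to one optimum is to retain a soft foreground-probability map and cut it at several thresholds, keeping each distinct mask. The function `candidate_masks` and the threshold values are illustrative assumptions; the sketch only shows the idea of returning a set of solutions rather than a single one.

```python
def candidate_masks(prob, thresholds):
    """Turn one foreground-probability map into several binary segmentations.

    prob       : 2-D list of foreground probabilities in [0, 1].
    thresholds : iterable of cut-off values to try.
    Returns a list of (threshold, mask) pairs in increasing threshold order,
    skipping masks already produced by a lower threshold so that every
    candidate is a distinct solution.
    """
    seen = set()
    candidates = []
    for t in sorted(thresholds):
        mask = tuple(tuple(p >= t for p in row) for row in prob)
        if mask not in seen:
            seen.add(mask)
            candidates.append((t, mask))
    return candidates

prob = [[0.9, 0.6], [0.4, 0.1]]
for t, mask in candidate_masks(prob, [0.3, 0.5, 0.55, 0.8]):
    print(t, mask)
```

Thresholds 0.5 and 0.55 give the same mask here, so only three distinct candidates are reported.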

Observation 5: A final observation is that, although the foreground region may be multiply connected and may contain holes, all the connected components of the foreground and all the background holes can be correctly distinguished without difficulty. This implies that connectivity is not a crucial property for foreground-background separation. We therefore favor a method capable of extracting such multiply connected foregrounds with holes.
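To make "multiply connected, with holes" concrete, the sketch below counts the foreground components and the enclosed background holes of a binary mask. Using 4-connectivity for both foreground and background, and defining a hole as a background region that never touches the image border, are simplifying assumptions of this illustration; the function names are hypothetical.

```python
from collections import deque

def components(mask, value):
    """Return the 4-connected regions of cells equal to `value` as lists of (row, col)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for r in range(h):
        for c in range(w):
            if seen[r][c] or mask[r][c] != value:
                continue
            # Breadth-first flood fill from an unvisited cell of the requested value.
            seen[r][c] = True
            region, queue = [], deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx] and mask[ny][nx] == value:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(region)
    return regions

def topology(mask):
    """Return (number of foreground components, number of background holes)."""
    h, w = len(mask), len(mask[0])
    fg = components(mask, 1)
    bg = components(mask, 0)
    # A background region that never reaches the image border is enclosed: a hole.
    holes = [reg for reg in bg
             if not any(y in (0, h - 1) or x in (0, w - 1) for y, x in reg)]
    return len(fg), len(holes)

mask = [[0, 0, 0, 0, 0, 0, 0],
        [0, 1, 1, 1, 0, 1, 0],
        [0, 1, 0, 1, 0, 1, 0],
        [0, 1, 1, 1, 0, 0, 0],
        [0, 0, 0, 0, 0, 0, 0]]
print(topology(mask))  # (2, 1): a ring plus a bar, and the ring's hole
```

A method that labels each patch on its own, rather than growing a single region, can produce such masks naturally.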

The following comparisons between the f-g puzzle and GrabCut under the same bounding-box configuration reveal the power of the f-g puzzle in extracting multiply connected foregrounds with holes.

Left: The original image with the bounding box; Middle: GrabCut result; Right: f-g puzzle result.

Left: The original image with the bounding box; Middle: GrabCut result; Right: f-g puzzle result.

APPLICATION

One example application of EFG-BPP is to automatically compute object boundaries for Poisson blending. Poisson blending is a method for merging scenes from different images, but it generally needs a tight boundary around the foreground to perform well if the backgrounds of the two images differ in appearance. Given the input box, the object boundary can be computed by applying EFG-BPP, which greatly reduces the user's effort in annotating the boundary. Some blending examples are shown below.
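For reference, the blending step itself can be sketched as standard gradient-domain (Poisson) blending of one grayscale channel, solved with Jacobi iterations. The mask would come from the box or from EFG-BPP; the function name, the iteration count, the 4-neighbour stencil, and the requirement that the mask stay away from the image border are our assumptions, and this is not the code in the released package.

```python
import numpy as np

def poisson_blend(source, target, mask, iters=2000):
    """Gradient-domain (Poisson) blending of one grayscale channel.

    Inside `mask`, iterate toward the solution of the discrete Poisson equation so
    that the result has the source's Laplacian while matching the target on the
    mask boundary; outside the mask the target is copied unchanged.
    Assumes the mask does not touch the image border (np.roll wraps around).
    """
    src = source.astype(float)
    result = target.astype(float).copy()
    # Guidance term: negative discrete Laplacian of the source (4-neighbour stencil).
    lap = (4 * src
           - np.roll(src, 1, 0) - np.roll(src, -1, 0)
           - np.roll(src, 1, 1) - np.roll(src, -1, 1))
    for _ in range(iters):
        neighbours = (np.roll(result, 1, 0) + np.roll(result, -1, 0)
                      + np.roll(result, 1, 1) + np.roll(result, -1, 1))
        # Jacobi update: f_p = (sum of neighbours + 4 g_p - sum of source neighbours) / 4.
        result[mask] = (neighbours[mask] + lap[mask]) / 4.0
    return result
```

A loose box mask forces this solve to match the target along a boundary that crosses mismatched background, which is where the seams in the box blends come from; a tighter mask keeps that boundary on target pixels only.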

Panels (left to right): source, target, box blend, EFG-BPP blend.

Poisson blending examples. The boundary artifacts produced by box blending are removed by using EFG-BPP.

PUBLICATION & OPEN SOURCE CODE

1. Yisong Chen, Antoni B. Chan, Enhanced figure-ground classification with background prior propagation (EFG-BPP), to appear in IEEE Trans. Image Processing.

Here is an easy-to-use, full MATLAB source code package for EFG-BPP. The code has been tested with MATLAB 7.x and later on 32-bit and 64-bit Windows systems, and can be downloaded and run immediately. (2014/12/22)

2. Yisong Chen, Antoni B. Chan, Adaptive figure-ground classification, CVPR 2012.

Here is an easy-to-use, full MATLAB source code package for the CVPR paper. The code has been tested with MATLAB 7.x and later on 32-bit and 64-bit Windows systems, and can be downloaded and run immediately. (2012/12/10)

The package is free for academic use only. For details see the README file in the package.

Based on the above package, we provide an interactive figure-ground segmentation tool, written by Miss Xintong Yu. It features practical multi-polygon mask assignment and on-the-fly tuning of the probability-map threshold. Try it and enjoy.

Others

We are very grateful to Prof. Kenichi Kanatani for his helpful discussions and advice.

We are looking for help and collaboration from more experts. Please feel free to contact me if you are interested in this work.

FUNDING AGENCY

N/A